Overview

oneTrack is an application designed to help teaching staff understand where students engage and disengage with their teaching material, by anonymously analysing students' emotions and attentiveness throughout a session. It was developed at JISC's CAN Hackathon at the Open University.

Project

The project grew out of our own experiences of lectures and of watching students disengage. We chose to work within JISC’s Intelligent Campus strand, building a solution that lets staff see where students are engaging and why engagement might be dropping across lectures.

This conference was about the Change Agents Network (CAN), a network of staff and students working in partnership to support curriculum enhancement and innovation across higher and further education globally. We aimed our entry at a product that would help staff and students alike improve the lecture experience.

How it works

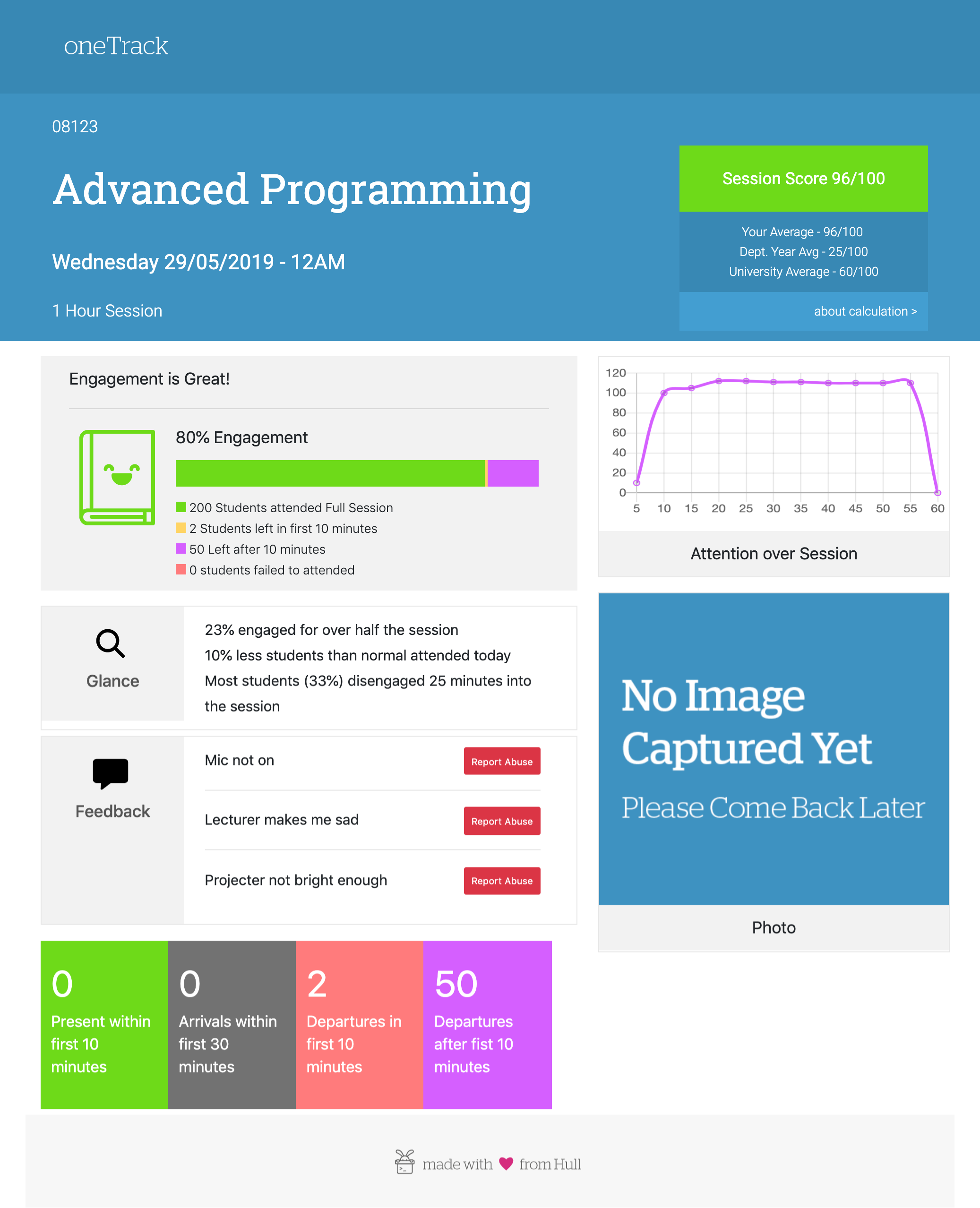

The client side captures images from a camera pointed at the audience and sends them to Azure’s face recognition API. The API returns a JSON array containing statistics for each person in the picture along with the coordinates of their face. OpenCV then marks up the frame with red squares where people are located, and the annotated frame is returned in a web session.
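
As a minimal sketch of the mark-up step, assuming the response follows the Face API `detect` shape (one object per face, each with a `faceRectangle` of `top`, `left`, `width` and `height` in pixels), the rectangle can be converted into the two corner points that OpenCV's `cv2.rectangle` takes. The sample response and the `face_boxes` helper are hypothetical, and OpenCV itself is only referenced in a comment so the sketch runs without it.

```python
import json
from typing import List, Tuple

# Hypothetical excerpt of a Face API "detect" response: one object per face,
# each with a faceRectangle given as top/left/width/height in pixels.
SAMPLE_RESPONSE = """
[
  {"faceRectangle": {"top": 40, "left": 100, "width": 60, "height": 60}},
  {"faceRectangle": {"top": 55, "left": 300, "width": 50, "height": 52}}
]
"""

Point = Tuple[int, int]
Box = Tuple[Point, Point]


def face_boxes(response_json: str) -> List[Box]:
    """Convert each faceRectangle into a (top-left, bottom-right) corner pair,
    the pt1/pt2 arguments that OpenCV's cv2.rectangle expects."""
    boxes = []
    for face in json.loads(response_json):
        r = face["faceRectangle"]
        top_left = (r["left"], r["top"])
        bottom_right = (r["left"] + r["width"], r["top"] + r["height"])
        boxes.append((top_left, bottom_right))
    return boxes


if __name__ == "__main__":
    for pt1, pt2 in face_boxes(SAMPLE_RESPONSE):
        # With OpenCV installed, each box could be drawn in red (BGR order):
        # cv2.rectangle(frame, pt1, pt2, (0, 0, 255), 2)
        print(pt1, pt2)
```

Keeping the JSON-to-coordinates step separate from drawing makes it easy to test without a camera or an image library.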

The server application also uses the data from each frame to calculate statistics across the session: it works out average attentiveness and engagement and scores the session before storing the results in the database. The front end then uses Chart.js to present this information in friendly graphs.
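
The write-up does not say how attentiveness, engagement or the session score were calculated, so the following is only a hypothetical sketch of that aggregation. It assumes each face carries `faceAttributes.headPose.yaw` and `faceAttributes.emotion.neutral` as in the Face API response, counts a face as attentive when its yaw is within an assumed 20 degrees of straight ahead, treats `1 - neutral` as an assumed engagement signal, and weights the two session averages equally for a 0 to 100 score. None of these thresholds or formulas come from oneTrack.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

# Hypothetical heuristic threshold, NOT taken from oneTrack: a face whose
# head yaw is within this many degrees of straight ahead counts as attentive.
FACING_FORWARD_MAX_YAW = 20.0


@dataclass
class FrameStats:
    attentiveness: float  # fraction of faces looking roughly forward, 0..1
    engagement: float     # mean of (1 - neutral emotion score), 0..1


def frame_stats(faces: List[Dict]) -> FrameStats:
    """Reduce one frame's detected faces to two numbers (hypothetical heuristics)."""
    if not faces:
        return FrameStats(0.0, 0.0)
    attrs = [f["faceAttributes"] for f in faces]
    forward = [abs(a["headPose"]["yaw"]) <= FACING_FORWARD_MAX_YAW for a in attrs]
    expressive = [1.0 - a["emotion"]["neutral"] for a in attrs]
    return FrameStats(sum(forward) / len(attrs), mean(expressive))


def session_summary(frames: List[FrameStats]) -> Dict[str, float]:
    """Average per-frame values across the session and derive a 0-100 score."""
    if not frames:
        return {"attentiveness": 0.0, "engagement": 0.0, "score": 0.0}
    att = mean(f.attentiveness for f in frames)
    eng = mean(f.engagement for f in frames)
    # Hypothetical scoring rule: equal weighting of the two averages.
    return {"attentiveness": att, "engagement": eng, "score": round(100 * (att + eng) / 2, 1)}


def face(yaw: float, neutral: float) -> Dict:
    """Build a minimal face entry containing only the fields used above."""
    return {"faceAttributes": {"headPose": {"yaw": yaw}, "emotion": {"neutral": neutral}}}


if __name__ == "__main__":
    frames = [
        frame_stats([face(5, 0.5), face(40, 0.9)]),
        frame_stats([face(-10, 0.2)]),
    ]
    print(session_summary(frames))
```

Averaging per frame first, rather than pooling every face in the session, keeps a crowded frame from outweighing a sparse one; whether that suits a given lecture is a design choice.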

If this were used in production, we would also link it to Panopto (or other lecture-capture software) and overlay attentiveness on the recording, so that staff could see which parts of the session increased or reduced engagement.
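
One way to prepare such an overlay, sketched here under stated assumptions, is to turn timestamped attentiveness samples into (start, end) ranges, in seconds from the start of the recording, where attentiveness falls below a threshold. The `low_attention_segments` function, the 0.5 threshold and the sample data are hypothetical, and no Panopto API is used or implied; pushing the ranges into a lecture-capture system would depend on that system's own interfaces.

```python
from typing import List, Optional, Tuple


def low_attention_segments(
    samples: List[Tuple[float, float]], threshold: float = 0.5
) -> List[Tuple[float, float]]:
    """Return (start, end) offsets in seconds where attentiveness < threshold.

    samples: (seconds since recording start, attentiveness 0..1), sorted by time.
    A segment ends at the first sample that recovers to >= threshold, or at the
    last sample if attentiveness never recovers.
    """
    segments = []
    start: Optional[float] = None
    for t, value in samples:
        if value < threshold and start is None:
            start = t  # attentiveness has just dropped
        elif value >= threshold and start is not None:
            segments.append((start, t))  # attentiveness has recovered
            start = None
    if start is not None and samples:
        segments.append((start, samples[-1][0]))
    return segments


if __name__ == "__main__":
    timeline = [(0, 0.8), (30, 0.4), (60, 0.3), (90, 0.7), (120, 0.2)]
    print(low_attention_segments(timeline))
```

Working in offsets from the recording start, rather than wall-clock time, is what would let the ranges line up with a recording's playback timeline.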

Tools Used

- The client application was a .NET Core application that collected images from a camera or webcam attached to a device

- The server side is also a .NET Core application

- The application uses Azure’s face-recognition API
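
The project's applications were written in .NET Core; purely to show the shape of the REST call, here is a Python sketch of a request to the Face API's v1.0 `detect` operation, which accepts raw image bytes as `application/octet-stream` and authenticates with the `Ocp-Apim-Subscription-Key` header. The endpoint, key and `build_detect_request` helper are placeholders, and the choice of `returnFaceAttributes` is an assumption. Microsoft has since restricted or retired several Face API attributes, including emotion, so check the current Face documentation before relying on them.

```python
import urllib.parse
import urllib.request


def build_detect_request(endpoint: str, key: str, image_bytes: bytes) -> urllib.request.Request:
    """Build (but do not send) a POST to the Face API v1.0 detect operation."""
    params = urllib.parse.urlencode({
        "returnFaceId": "false",
        # Assumed attribute list; availability depends on your Azure access.
        "returnFaceAttributes": "headPose,emotion",
    })
    url = f"{endpoint.rstrip('/')}/face/v1.0/detect?{params}"
    return urllib.request.Request(
        url,
        data=image_bytes,  # raw JPEG/PNG bytes captured from the camera
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder endpoint and key: replace with your own Azure resource values.
    req = build_detect_request("https://<your-resource>.cognitiveservices.azure.com/",
                               "<your-key>", b"...image bytes...")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would send it and return the JSON response.
```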


The JISC logo is courtesy of JISC, and Open University branding is copyright of the Open University.

The CAN 2019 brand is a partnership between JISC and the Open University.